A Multidisciplinary Approach for Developing Two Bespoke AI Tools to Support Regulatory Activities Within the MHRA Clinical Trials Unit

Kingyin Lee (MHRA), Greg Headley (Informed Solutions Ltd), David Lawton (Informed Solutions Ltd), Andrea Manfrin (MHRA)

HIGHLIGHTS

Clinical trials are complex for several reasons, including scientific, regulatory, logistical, and ethical challenges.

We have identified an opportunity to develop two bespoke AI-driven solutions to support regulatory assessments of clinical trials.

These AI tools will support the assessment of clinical trials by improving efficiency, accuracy and consistency when analysing large volumes of data, and by providing greater transparency, regulatory confidence and public trust.

This paper describes the journey of a multidisciplinary team of highly skilled people, from the conceptualisation stage to the creation of two AI tools that the MHRA Clinical Trials Unit will use.

1. Introduction

1.1 The Importance of Clinical Trials

Clinical trials are systematic research studies conducted in humans to evaluate the safety, efficacy, and optimal use of medicines and healthcare products. They represent a critical step in the development of evidence-based medicine, providing the rigorous data necessary to support regulatory approval and inform clinical practice. By adhering to predefined protocols and ethical standards, clinical trials help ensure that new treatments are both safe and effective before they are widely adopted. They are essential in advancing medical knowledge, protecting patient health, and maintaining public trust in healthcare systems.

1.2 The Assessment Process – Current Challenges and Future Demands

Clinical trials are generally regarded as the gold standard of evidence-based medicine, and they sit within a complex set of timelines and dependencies in the clinical development of medicines, from discovery to authorisation.

Sponsor organisations that submit applications for authorisation to conduct clinical trials often face significant time pressures, driven by the benefits of being first to market and by the imperative to improve patient outcomes through promising treatments, while keeping patient safety as the main priority. Clinical development can take from 5 to 20 years, with typical timescales of 10 to 15 years¹.

Over the last five years (since the start of the pandemic), the median clinical development time for innovative medicines for infectious diseases has been estimated at approximately 7.3 years, from first use in humans to marketing authorisation. This shorter timescale has been supported by new approaches to planning and conducting trials, such as adaptive protocol designs and decentralised trials.

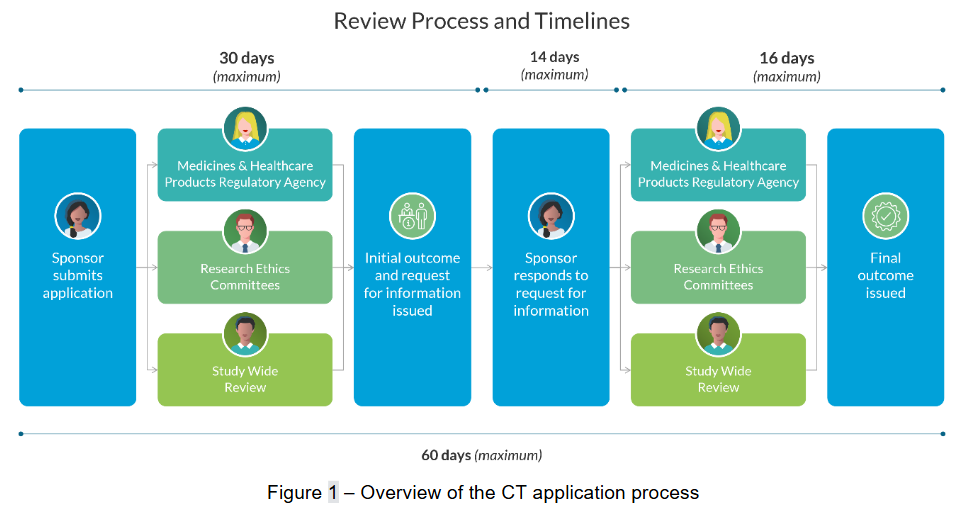

Medicines regulators recognise the need for reliable and consistent assessment timelines for Clinical Trial Authorisation (CTA) to facilitate innovation. Last year, more than 5,000 applications were assessed by the UK’s Medicines and Healthcare products Regulatory Agency (MHRA) Clinical Trials Unit. The work required to assess these applications is very time-consuming, and with the rise of adaptive and complex innovative clinical trial designs, assessment times are set to increase further. Typically, assessment involves extensive re-reading of complex documentation to extract key information, reviewing responses to requests for information (RFIs), and cross-checking of statements and justifications by subject matter experts. This process must occur within tight timescales and involves multiple organisations and stakeholders, as shown in Figure 1.

Data from the MHRA indicates that most initial clinical trial applications trigger questions from assessors, formally known as grounds for non-acceptance (GNAs), which sponsors must address before a trial can be approved. This highlights a significant opportunity for assessors to provide scientific advice that could improve the quality of CTA applications. However, assessment teams currently spend much of their time reviewing these applications, many of which cannot be approved at first submission because of common GNAs, while also meeting other demands across the Clinical Investigation and Trials (CIT) division.

This situation is expected to be compounded by new regulations coming into effect on 28th April 2026, which will shift critical time pressures away from sponsors and onto the regulator. In response, the MHRA needs to scale the capabilities of the CIT division, building the capacity and flexibility to assess significantly greater volumes of work within demanding timescales, without compromising quality and with patient safety remaining its priority.

1.3 The Potential for AI

As Lord Darzi stated in his summary letter to the Secretary of State for Health and Social Care on 15th November 2024: “There is enormous potential in AI (Artificial Intelligence)² to transform care and for life sciences breakthroughs to create new treatments”. Since generative AI tools such as ChatGPT became popular in 2022³, many stakeholders have sought to rapidly develop their own AI applications. AI has been applied in many areas⁴,⁵, including drug discovery, prediction of safety and efficacy, trial design, and participant recruitment and retention⁶.

The MHRA has adopted an innovative, industry-leading approach to exploring the potential of AI in a responsible and risk-proportionate manner, in line with the MHRA Data Strategy published in September 2024⁷. In 2024, MHRA’s CIT division identified several potential partners to help develop an AI capability to support clinical trial assessments. However, initial discussions revealed that the AI providers engaged did not understand the specific needs of MHRA’s regulatory team, and that existing tools did not address the breadth and depth of MHRA’s requirements or have a proven capability of working at scale in safety-critical environments.

Seeking a more collaborative approach, MHRA partnered with Informed Solutions, an organisation that offered a combination of AI expertise, user-centred design approaches, and experience of working with deep subject matter experts to deploy AI in demanding regulatory environments. The focus of the partnership was to deliver AI solutions that meet MHRA’s specific regulatory needs.

This paper outlines the recent work delivered by MHRA in partnership with Informed Solutions, which aimed to develop and deploy AI-enabled tools to support clinical trial assessors in managing increasing pressures, including higher workloads and shorter response times for CTAs. Informed Solutions applied a novel AI Readiness Assessment method to build a deep understanding of how MHRA’s assessors work, mapping the data available and assessing its suitability for use by different AI techniques.

This approach rapidly developed an evidence-based view of how AI could be deployed responsibly to meet the needs of assessors and MHRA’s wider business requirements. At critical milestones, decisions on which potential solutions to prioritise were based on targeted proof-of-value exercises and insights from user research. This maximised the return on investment that could be delivered within the limited time and funding available, and resulted in the successful design, development and deployment of two novel AI-enabled tools to support the CIT division.

2. Methods

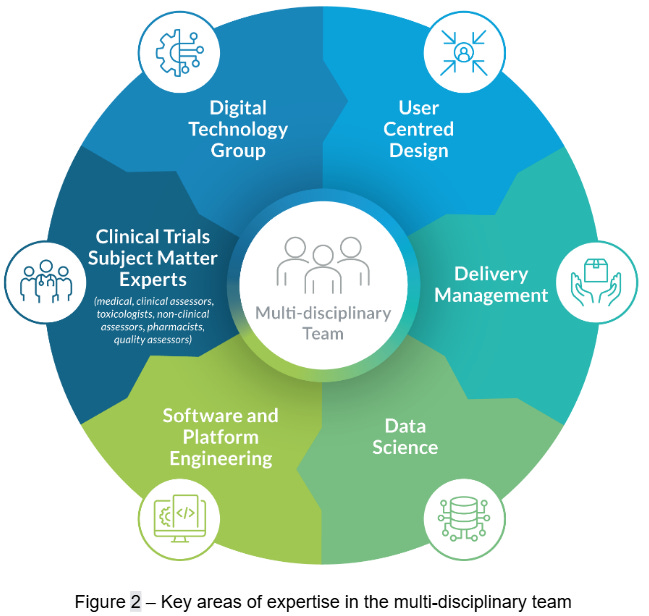

To deliver targeted AI innovation in a responsible manner, we drew upon a diverse team of experts from both MHRA and Informed Solutions. The MHRA contributed clinical trial subject matter expertise, as well as software engineering and architecture capabilities from their Digital Technology Group (DTG). These capabilities were complemented by Informed Solutions’ strengths in software engineering, technical architecture, data science, delivery management and user-centred design skills. Together, this multi-disciplinary team (Figure 2) was able to rapidly identify and qualify opportunities for AI enablement and translate them into operational digital solutions.

This work aimed to improve regulatory effectiveness by addressing four key domains: people, data, technology, and business. We assessed each domain to understand the existing landscape and develop targeted interventions to improve productivity, consistency and satisfaction, as set out below:

The people domain focused on the tasks completed by expert clinical trials assessors, the pain points in their workflows, and maximising end-user value.

The data domain assessed the quality, availability, governance structures, and compliance requirements of the data assets involved in clinical trial submissions, assessments and associated regulatory documents.

The technology domain reviewed infrastructure capabilities, scalability, integration with existing systems, and security needs.

The business domain explored practical AI solutions that could support decision-making, streamline processes and workflows, improve assessment consistency and boost user satisfaction.

We organised project delivery into two phases: discovery and productisation. The discovery phase built a strong, cross-domain understanding and pinpointed the most valuable opportunities for intervention. Then, the productisation phase applied a user-centred, iterative approach to turn those opportunities into operational solutions, securing user buy-in and keeping business value at the forefront of design.

2.1 Discovery Phase

During the discovery phase, our goal was to gain a comprehensive understanding of MHRA’s clinical trial authorisation processes, data and technology landscape. To support this, we conducted an AI readiness assessment of the CTA process. This assessment unpacked the ambitions of the MHRA and developed our understanding of readiness across the four aforementioned domains.

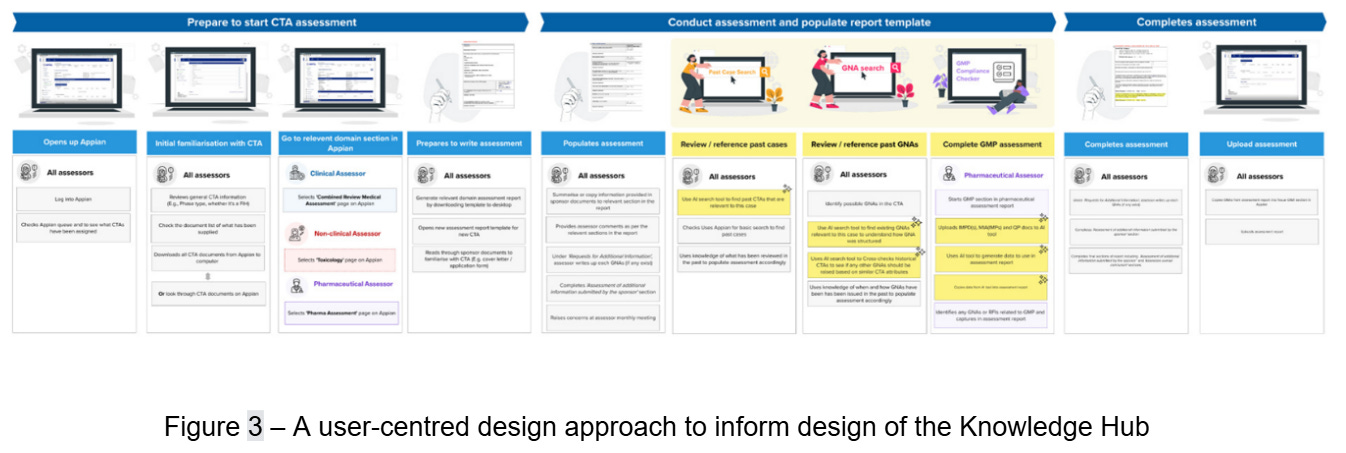

We placed user-centred design at the heart of the discovery phase, drawing on extensive user research and business analysis. Working with key stakeholders, we mapped processes and pain points through targeted workshops, which revealed essential insights into workflows, roles, and interdependencies within the CTA process (Figure 3). We documented operational challenges, trust factors, and business priorities, alongside potential benefits, to inform solution design.

At the same time, our team probed the CTA process to gain a thorough understanding of the data involved across workflows. We clarified the scope, quality and structure of critical data assets, including CTA documents, internal guidance, and historical responses. In parallel, we examined existing data governance procedures to understand their structure. Given the regulatory environment and safety-critical nature of CTA, we paid particular attention to commercially sensitive information, intellectual property, personally identifiable information, and compliance requirements.

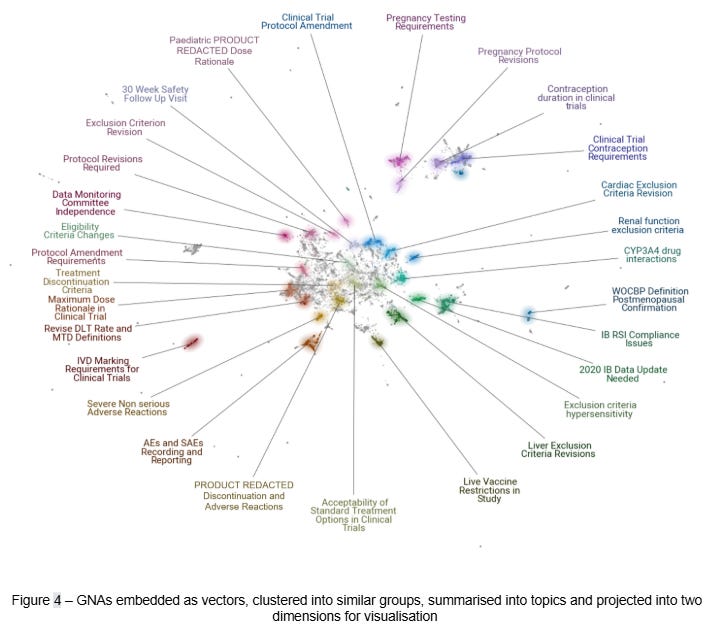

Building on our understanding of the user and business contexts, we shifted focus to technical exploration and the evaluation of suitable AI techniques. This included the use of text embeddings for topic modelling (Figure 4) and natural language processing methods to identify patterns in regulatory documents. Specifically, we used text embeddings to analyse GNAs across both structural and semantic dimensions. We also examined common CTA documents, including protocols, investigator brochures (IB), application forms, and good manufacturing practice (GMP) certificates. This analysis ultimately confirmed the suitability of existing data holdings to support process improvement and automation in live operations.

At the conclusion of our analysis, we identified a range of viable options utilising AI techniques, including intelligent document processing, predictive analytics, and generative AI. Engagement with clinical trial assessors helped us determine which options offered the most value and which were unlikely to be feasible within MHRA’s operational constraints, timelines, and budget. We quickly ruled out fully generative approaches: the trial authorisation process requires critical scrutiny of detailed documents, and even the most advanced large language models (LLMs) cannot be relied on to generate accurate information consistently. We also found that predicting nuanced, context-specific GNAs in a fully automated way exceeded the scope of this initial project.

As we eliminated some options, others stood out as candidate deliverables to take forward to productisation. Specifically, we identified three solutions to develop into proofs-of-concept: data-driven guidance to trial sponsors, intelligent GNA search using natural language queries, and automating the validation of GMP compliance.

2.1.1 Data-driven Guidance for Trial Sponsors

Our first solution aimed to reduce rejections and delays in the CTA process by providing more insight into GNAs. We achieved this by converting free-text GNAs into text embeddings⁸, a natural language understanding technique whose modern, context-aware form is built on the transformer architecture⁹. Once transformed into this embedding vector space, GNAs were clustered into topics (Figure 4). Topics with many members and coherent themes were selected for further analysis. Our team then developed these candidates into updated guidance that is provided to sponsors ahead of the CTA process. This data-driven approach helped to align the guidance with the most common issues prompting GNAs.
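The clustering step can be illustrated with a minimal sketch. It assumes each GNA has already been converted into an embedding vector; the short toy vectors below are invented stand-ins for real model outputs, which typically have hundreds of dimensions. The paper does not state which clustering algorithm was used, so plain k-means with deterministic farthest-point seeding is an illustrative choice here, and `kmeans` and `large_topics` are hypothetical helpers.

```python
from collections import Counter
from typing import List, Sequence


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean_vector(vectors: List[Sequence[float]]) -> List[float]:
    """Component-wise mean of a non-empty list of vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def farthest_point_init(vectors, k):
    """Deterministic seeding: start from the first vector, then repeatedly add
    the vector farthest from every centroid chosen so far."""
    centroids = [list(vectors[0])]
    while len(centroids) < k:
        farthest = max(vectors, key=lambda v: min(squared_distance(v, c) for c in centroids))
        centroids.append(list(farthest))
    return centroids


def kmeans(vectors, k, max_iter=100):
    """Lloyd's k-means; returns one topic label per input vector."""
    centroids = farthest_point_init(vectors, k)
    labels = None
    for _ in range(max_iter):
        # Assignment step: each GNA joins its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: squared_distance(v, centroids[c]))
            for v in vectors
        ]
        if new_labels == labels:
            break  # converged: no assignment changed
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [v for v, label in zip(vectors, labels) if label == c]
            if members:  # keep the previous centroid if a topic is empty
                centroids[c] = mean_vector(members)
    return labels


def large_topics(labels, min_size):
    """Topic labels with at least min_size members, largest first."""
    return [topic for topic, n in Counter(labels).most_common() if n >= min_size]


# Invented 3-dimensional "embeddings" for seven GNAs.
gna_vectors = [
    [0.90, 0.10, 0.00], [0.85, 0.15, 0.05], [0.95, 0.05, 0.00],
    [0.10, 0.90, 0.10], [0.05, 0.95, 0.00], [0.15, 0.85, 0.10],
    [0.00, 0.10, 0.95],
]
labels = kmeans(gna_vectors, k=3)
print(large_topics(labels, min_size=2))  # the two three-member topics; the singleton is dropped
```

Selecting topics by size mirrors the criterion described above; judging whether a topic is coherent still requires expert review.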

2.1.2 Intelligent GNA Search using Natural Language Queries

Our second solution addressed a common task in trial assessment: reviewing the rationale, structure, and language of GNAs raised in previous applications. A fundamental requirement for the MHRA is to provide consistency in trial assessment. This means that any two GNAs raised for the same reason should have uniform rationale and language. To achieve this, trial assessors must often spend significant time locating and reviewing historical GNAs to understand best practice and precedent.

Identifying and reviewing historical GNAs is a manual, time-consuming activity, complicated by multiple information sources. These can include tacit internal knowledge, business records and unstructured documentation spread across systems. To address this, we developed a domain-aware query tool which enables assessors to interrogate previously raised GNAs using natural language.

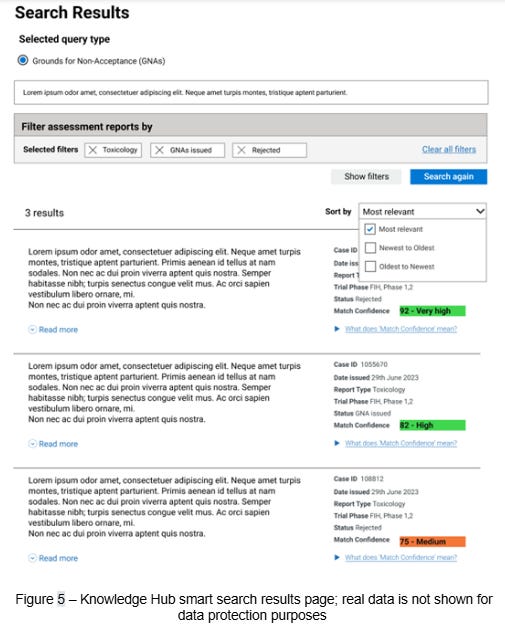

This tool is underpinned by the same text embedding methodology¹⁰ used to cluster GNAs in the topic analysis. First, GNAs are converted into fixed-length vector representations using an embedding model. These vectors are then stored in a vector database, which is optimised for vector comparison. An assessor can then submit a plain-language query to the search engine, which converts it into the same fixed-length vector representation as the GNAs already in the database. This query vector is compared against the database to retrieve the most similar GNA vectors. The original text of the matching GNAs is then retrieved and presented to assessors in a convenient interface we call the Knowledge Hub.
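A minimal sketch of this retrieval flow, under stated assumptions: `toy_embed` is a hypothetical stand-in for a trained embedding model (a normalised bag-of-words over a small fixed vocabulary), and `InMemoryVectorStore` is a hypothetical stand-in for a vector database. In this sketch each entry keeps the original GNA text next to its vector, so a search returns readable text together with a cosine-similarity score. The example GNAs are invented.

```python
import math
import re
from typing import Callable, List, Tuple

# Hypothetical vocabulary; a real embedding model needs no fixed word list.
VOCAB = ["dose", "escalation", "stopping", "rules", "contraception", "pregnancy",
         "gmp", "certificate", "manufacturing", "site", "safety", "monitoring"]


def toy_embed(text: str) -> List[float]:
    """Fixed-length, unit-normalised word-count vector (stand-in for a model)."""
    tokens = re.findall(r"[a-z]+", text.lower())
    vec = [float(tokens.count(word)) for word in VOCAB]
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


def cosine(a: List[float], b: List[float]) -> float:
    # Vectors are unit length (or all zeros), so the dot product is the cosine.
    return sum(x * y for x, y in zip(a, b))


class InMemoryVectorStore:
    """Linear-scan stand-in for a vector database."""

    def __init__(self, embed: Callable[[str], List[float]]):
        self.embed = embed
        self.entries: List[Tuple[List[float], str]] = []  # (vector, GNA text)

    def add(self, text: str) -> None:
        self.entries.append((self.embed(text), text))

    def search(self, query: str, top_k: int = 3) -> List[Tuple[float, str]]:
        q = self.embed(query)  # the query uses the same embedding as the GNAs
        scored = [(cosine(q, vec), text) for vec, text in self.entries]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:top_k]


store = InMemoryVectorStore(toy_embed)
for gna in [
    "Please justify the dose escalation steps and stopping rules.",
    "Contraception and pregnancy testing requirements are insufficient.",
    "Provide the GMP certificate for the manufacturing site.",
]:
    store.add(gna)

results = store.search("stopping rules for dose escalation", top_k=2)
for score, text in results:
    print(f"{score:.2f}  {text}")
```

A production vector database would typically replace the linear scan with an approximate nearest-neighbour index so that search stays fast over a large historical catalogue.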

2.1.3 Automating the Validation of Good Manufacturing Practice Compliance

Our last solution streamlines the essential but laborious task of GMP validation. Any investigational medicinal product (IMP) or placebo being used in a clinical trial must satisfy the standards of good manufacturing practice. Sponsors submit relevant documentation with their application, which the MHRA must then validate. Verifying this information involves examination of manufacturing declarations made by the sponsors, which must be validated against the sites and activities approved by regulators.

To improve this process, our solution automates both the document review and verification steps using a set of fusion models that combine text and computer vision neural networks¹¹. We fine-tuned these models on examples, training them to extract the specific GMP content required for verification. Where the fine-tuned models fail to extract the required content, a large language model parses the text directly and returns the desired content.
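The fallback logic can be sketched as follows. The field names in `REQUIRED_FIELDS`, the function `extract_gmp_fields` and the two stub extractors are hypothetical; in a real deployment `primary` would wrap the fine-tuned fusion models and `fallback` would wrap a call to a large language model. In this sketch the fallback is consulted only for fields the primary extractor missed, and each value is tagged with its source so an assessor can see which path produced it.

```python
from typing import Callable, Dict, Optional, Tuple

# Hypothetical schema: the paper does not list the GMP fields that are extracted.
REQUIRED_FIELDS = ("site_name", "site_address", "authorisation_number")

Extractor = Callable[[str], Dict[str, Optional[str]]]


def extract_gmp_fields(
    document_text: str, primary: Extractor, fallback: Extractor
) -> Tuple[Dict[str, str], Dict[str, str]]:
    """Use the primary (fine-tuned) extractor first and consult the fallback
    (for example an LLM) only for required fields that are still missing."""
    values: Dict[str, str] = {}
    sources: Dict[str, str] = {}

    primary_out = primary(document_text)
    for name in REQUIRED_FIELDS:
        if primary_out.get(name):  # None or an empty string counts as a failure
            values[name] = primary_out[name]
            sources[name] = "fine-tuned"

    missing = [name for name in REQUIRED_FIELDS if name not in values]
    if missing:
        fallback_out = fallback(document_text)
        for name in missing:
            if fallback_out.get(name):
                values[name] = fallback_out[name]
                sources[name] = "llm-fallback"
    return values, sources


# Stub extractors standing in for the real models (invented outputs).
def stub_fine_tuned(text: str) -> Dict[str, Optional[str]]:
    return {"site_name": "Example Pharma Ltd", "site_address": None,
            "authorisation_number": "XX-0000"}


def stub_llm(text: str) -> Dict[str, Optional[str]]:
    return {"site_name": "ignored", "site_address": "1 Example Street, Exampletown"}


fields, sources = extract_gmp_fields("...declaration text...", stub_fine_tuned, stub_llm)
print(fields)
print(sources)
```

A field that neither path finds is simply left out, so it surfaces as a gap for the assessor rather than being guessed.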

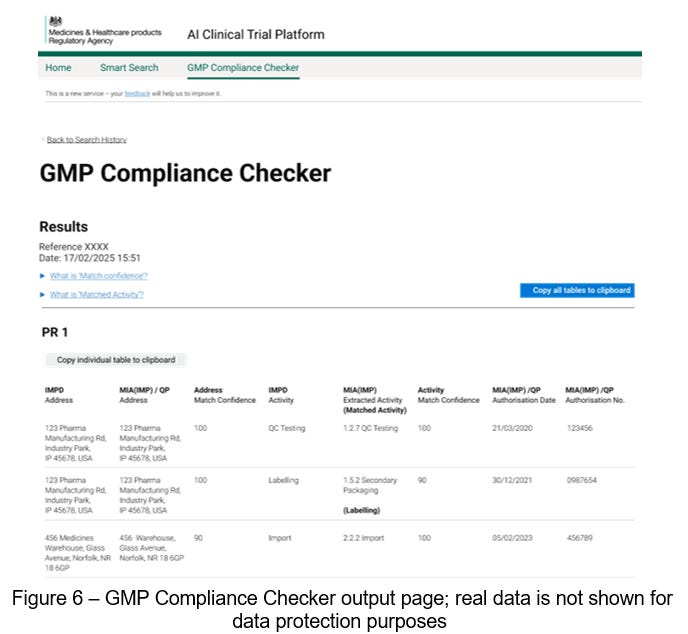

We then built a verification algorithm that matches the content extracted from application documents against regulatory certificates, confirming whether a given site is certified for GMP. This verification result is presented, alongside a confidence score, to assessors for review in a tool we call the GMP Compliance Checker. This human-in-the-loop layer is essential, ensuring that expert assessors remain the decision makers.
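One way the matching step could work is sketched below, as an assumption rather than a description of the deployed algorithm. It picks the certificate whose site name is most similar to the declared site (using the standard library's `difflib.SequenceMatcher`), treats that similarity as a simple confidence score, and then checks that every declared activity is covered by the certificate. `Certificate`, `verify_declaration`, the example records and the 0.85 threshold are hypothetical.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from typing import FrozenSet, Iterable, List, Optional


@dataclass(frozen=True)
class Certificate:
    """Hypothetical GMP certificate record: a site and its certified activities."""
    site_name: str
    activities: FrozenSet[str]


@dataclass
class Verification:
    certified: bool
    confidence: float  # name-similarity score in [0, 1]
    matched_site: Optional[str]
    uncovered_activities: FrozenSet[str]


def normalise(name: str) -> str:
    """Lower-case, drop full stops and collapse whitespace before comparing names."""
    return " ".join(name.lower().replace(".", "").split())


def verify_declaration(site_name: str, declared_activities: Iterable[str],
                       certificates: List[Certificate],
                       min_similarity: float = 0.85) -> Verification:
    declared = frozenset(declared_activities)
    best: Optional[Certificate] = None
    best_score = 0.0
    for cert in certificates:
        score = SequenceMatcher(None, normalise(site_name),
                                normalise(cert.site_name)).ratio()
        if score > best_score:
            best, best_score = cert, score
    if best is None or best_score < min_similarity:
        # No sufficiently similar certified site: flag every activity for review.
        return Verification(False, best_score, None, declared)
    uncovered = declared - best.activities
    return Verification(not uncovered, best_score, best.site_name, uncovered)


certificates = [
    Certificate("Example Pharma Ltd", frozenset({"manufacture", "batch certification"})),
    Certificate("Other Labs Ltd", frozenset({"packaging"})),
]
ok = verify_declaration("Example Pharma Ltd.", {"manufacture"}, certificates)
gap = verify_declaration("Example Pharma Ltd", {"manufacture", "importation"}, certificates)
unknown = verify_declaration("Unknown Site", {"manufacture"}, certificates)
print(ok, gap, unknown, sep="\n")
```

Returning the unmatched activities, rather than only a pass or fail flag, gives the assessor something concrete to check, in keeping with the human-in-the-loop design.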

2.2 Productisation Phase

To bring practical value to the clinical trials team, we converted each of our proofs-of-concept into production services. To scale up the GNA search and GMP validation solutions, we focussed on reliability and designed practical, repeatable workflows. These solutions were optimised for rapid processing, consistent performance, and effective management of the extensive historical GNA and GMP datasets.

Another key factor in moving from technical proofs-of-concept to production-grade solutions was ensuring effective user experience (UX). This involved designing intuitive interfaces that integrated seamlessly into assessors’ day-to-day work to improve productivity. Our user-centred design experts developed and iterated designs based on UX best practices. We validated these designs through operational testing and refined them using evidence-based feedback to ensure they met user needs, business requirements and operational constraints.

To be deployed into the MHRA’s live environment, each solution needed to meet DTG’s technical, security, governance and architecture standards. During the productisation phase, we worked closely with DTG experts to assure the technical design, complete formal testing, and conduct independent security reviews. Each solution was deployed in a secure, isolated environment using strict role-based access controls (RBAC), so that only clinical trial assessors could access data and service outputs. This approach maintained compliance with data protection and intellectual property requirements, reinforcing the trustworthiness of all solutions.
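Role-based access control of this kind can be reduced to a deny-by-default permission check. The role names, permission strings and helpers below are hypothetical illustrations, not MHRA's actual configuration; the point is that a user gains a permission only through a role that explicitly grants it.

```python
from dataclasses import dataclass, field
from typing import Dict, Set

# Hypothetical roles and permissions for illustration only.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "clinical_trial_assessor": {"knowledge_hub:search", "gmp_checker:submit",
                                "gmp_checker:view_results"},
    "platform_operator": {"service:restart"},  # operates the service, cannot read data
}


class AccessDenied(Exception):
    pass


@dataclass
class User:
    name: str
    roles: Set[str] = field(default_factory=set)


def is_allowed(user: User, permission: str) -> bool:
    """Deny by default: only permissions granted by one of the user's roles pass."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in user.roles)


def require(user: User, permission: str) -> None:
    """Raise AccessDenied unless the user holds the permission."""
    if not is_allowed(user, permission):
        raise AccessDenied(f"{user.name} is not permitted to {permission}")


assessor = User("assessor-1", {"clinical_trial_assessor"})
operator = User("operator-1", {"platform_operator"})
require(assessor, "knowledge_hub:search")  # passes silently
print(is_allowed(operator, "knowledge_hub:search"))
```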

Overall, our methodology embedded AI-driven enhancements effectively within the clinical trial authorisation process, combining technical innovation with user-centred design and responsible data practices. It was structured to uphold ethical standards, protect sensitive data and help assessors work more productively, ultimately contributing to a more effective and resilient regulatory process.

3. Results

Before the end of 2024, the CIT division, in collaboration with Informed Solutions, concluded a proof-of-concept study to support the assessors in their CTA activities. This study resulted in updated guidance to trial sponsors and the creation of two AI-enabled software solutions, which are presented below. These products were selected for initial development based on their potential to rapidly deliver return on investment and ability to build trust with end-users.

Our approach demonstrated that, with the right method and expertise, it is possible to design, develop, and deploy AI solutions into a clinical trials environment. Moreover, our solutions complied with DTG standards, considered all user needs, and delivered measurable improvements and efficiencies to the process. A user-centred design approach was central to this success. It helped build trust and confidence in the tools and supported adoption, countering the perception that AI is challenging for users and difficult to scale beyond proof-of-concept.

3.1 Data-driven Sponsor Guidance from Topic Modelling

During the discovery phase, topic modelling was initially used as an analytical tool to understand the nature of GNAs better. By evaluating its outputs, we identified two practical use-cases. The first informs the published sponsor guidance on common GNAs by deriving insights directly from the clustering of GNAs into topics. This approach enhances the existing, experience-based guidance with concrete, data-driven insights.

During this topic modelling exercise, the CIT division took the opportunity to review more than 110,000 GNAs and compare them with the current website guidance (Common issues identified during clinical trial applications - GOV.UK). The review confirmed that most of the common issues already listed were also reflected in the data, validating the existing guidance. It also highlighted several common issues that had not been identified before; these are now being drafted for the next update to this MHRA webpage. This demonstrates a research benefit of developing AI: it enabled a quantitative review and enhancement of existing guidance.
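The comparison against existing guidance could be automated along these lines; the paper does not describe how it was done, so this is an illustrative assumption. Each topic is represented by the centroid of its GNA embeddings and each existing guidance item by its own embedding, and topics whose closest guidance item falls below a similarity threshold are flagged as candidate new common issues. The vectors, topic names, `uncovered_topics` helper and 0.8 threshold are invented for the example.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def uncovered_topics(topic_centroids: Dict[str, List[float]],
                     guidance_vectors: List[List[float]],
                     threshold: float = 0.8) -> List[str]:
    """Topics whose closest existing guidance item is less similar than threshold."""
    flagged = []
    for topic, centroid in topic_centroids.items():
        best = max((cosine(centroid, g) for g in guidance_vectors), default=0.0)
        if best < threshold:
            flagged.append(topic)
    return flagged


# Invented example data: two topic centroids and two existing guidance items.
topic_centroids = {
    "dose_escalation": [0.90, 0.10, 0.00],
    "emerging_issue": [0.00, 0.10, 0.95],
}
guidance_vectors = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
flagged = uncovered_topics(topic_centroids, guidance_vectors)
print(flagged)
```

Flagged topics are only candidates; deciding whether they warrant new guidance remains an expert judgement.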

3.2 Knowledge Hub: Enhancing CTA Efficiency and Consistency

Assessors at the MHRA currently face challenges in efficiently accessing historical GNAs and prior clinical trial case data. This stems from the limited search functionality across existing records, which can delay decision-making and reduce consistency across assessments.

To address this, we developed our second use-case derived from the topic modelling work: a Knowledge Hub of historical application data, which represents our first AI tool. This idea emerged from recognising that the text embedding process used in topic modelling had standalone value. It encoded the structure and meaning of regulatory text, making it easily searchable. The resulting Knowledge Hub is a centralised, queryable database of historical GNAs and assessment reports from closed clinical trial applications.

The Knowledge Hub gives assessors access to actionable historical context, strengthening the quality, consistency, and replicability of regulatory oversight. By improving how prior decisions can be surfaced and referenced, it supports more informed and efficient clinical trial assessments. With an intuitive and efficient interface (Figure 5), the service offers an entirely new pathway for assessors to access and understand historical information.

3.3 GMP Compliance Checker

As part of the CTA process, sponsors must submit mandatory documents detailing the manufacture of any investigational medicinal products (IMPs) included in the trial. These documents include both the sites involved in the manufacturing process and the activities each site has been approved for by regulators. Previously, pharmaceutical assessors at the MHRA had to manually review these documents to ensure compliance before authorisation of a trial.

With the introduction of our second tool, the GMP Compliance Checker, this verification process is streamlined. Instead of manually reviewing and cross-referencing what can be dozens of documents, assessors now submit the relevant documents to this solution. Our fine-tuned deep neural networks review and extract the relevant GMP information and collate it into a review interface (Figure 6).

The value of this tool is evident in a reduction of up to 60-fold in the time required for GMP validation. For human review, the time taken to verify GMP compliance scales with the number of IMPs involved in the trial, as each one requires manufacturing declarations. The GMP Compliance Checker dramatically reduces the time needed to validate each of these, meaning that efficiency gains scale proportionally to the number of documents needing review.

For a conventional trial application with numerous IMPs, the manual verification process can take up to two hours. Benchmark results from development of the GMP Compliance Checker indicate that our automated solution can complete this verification step in less than 60 seconds, a time saving of more than 99%. This speed-up allows assessors to concentrate their efforts on reviewing the solution’s results, reducing errors and improving consistency.
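The percentage follows directly from the two timings quoted in this paragraph. A small hypothetical helper makes the arithmetic explicit, using the upper-bound manual time of two hours and an automated time of 60 seconds:

```python
def time_saving(manual_seconds: float, automated_seconds: float):
    """Return the fold reduction and the percentage of time saved."""
    fold = manual_seconds / automated_seconds
    saved_pct = 100 * (manual_seconds - automated_seconds) / manual_seconds
    return fold, saved_pct


fold, saved_pct = time_saving(manual_seconds=2 * 60 * 60, automated_seconds=60)
print(f"{fold:.0f}-fold faster, {saved_pct:.1f}% of the time saved")
# prints: 120-fold faster, 99.2% of the time saved
```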

4. Discussion

The process of clinical trial authorisation requires attention to detail, deep subject-matter expertise, and the methodical application of regulations. These traits do not immediately seem to favour AI technologies, many of which are probabilistic by design. Yet we have demonstrated that AI can be applied judiciously, offering gains in productivity, consistency and satisfaction for the assessors charged with ensuring that new treatments are safe and effective.

Effectively introducing AI technologies to the CIT division required strict adherence to the governance procedures of the MHRA. Any solution also had to fit into the existing technology landscape and respect the organisation’s security and data protection requirements. Critically, the needs of end-users had to be at the centre of design and development to ensure an effective solution with buy-in from users. All these factors mandated a multi-disciplinary team of clinical trial experts, user-centred designers, software developers, data scientists, and project managers to deliver valuable outcomes.

The new Knowledge Hub unlocks the value of years of experience and expertise by consolidating data into a shared tool that uses AI to organise and surface the most relevant information for experts. This allows experts to progress cases more quickly and supports the upskilling of assessors by giving them access to a greater volume of high-quality knowledge. Our multidisciplinary team approach ensured that we developed effective products, with buy-in from stakeholders and users (the CIT team and the wider MHRA DTG). By focusing effort on what could realistically be delivered within the available time and budget, we maximised value and de-risked productisation. This allowed us to progress beyond the proof-of-concept stage, where many initiatives stall.

Initial estimates indicate savings of up to 180 full-time equivalent (FTE) days per year in clinical trial assessment. As these efficiencies are realised, assessors can redirect time from search activities to higher-value tasks, such as providing upstream advice to sponsors. In turn, this strengthens sponsors’ applications, ultimately supporting safer trials and faster approvals.

To address increasing case volumes and time-critical pressures at the MHRA, it was necessary to scale capability and adopt innovative, risk-controlled, user-centred AI approaches¹². This did not come without challenges. For example, access to secure sandbox environments was initially limited, but MHRA’s organisation-wide commitment to innovation allowed us to leverage investments in secure and prototype environments. Another challenge was restricted access to data: sourcing datasets and obtaining approvals took significant time, impeding some proof-of-concept work. Leadership support was critical in overcoming this barrier, providing assurance and direction across teams. This leadership was also instrumental in overcoming domain and technical challenges, providing backing to make use of the latest techniques and technologies¹.

4.1 Benefits of the two AI tools

Improved Efficiency: Rapid access to relevant historical decisions, reducing time spent searching fragmented records.

Consistency in Decision-Making: Aligns with past regulatory decisions to support harmonised and transparent assessments.

Enhanced Confidence: Equips assessors with data-driven insights to strengthen evaluations of new applications. This supports faster access to life-saving treatments, reinforces regulatory confidence, and demonstrates responsible AI design.

Skills Development: Accelerates the learning and development of new assessors by giving them immediate access to years of accumulated expertise.

Staff Satisfaction: Reduces repetitive manual work (e.g. GMP Compliance Checker), enabling highly skilled experts to focus on higher-value tasks.

Streamlined Review: Increases efficiency in the review and approval process, cutting lead times and reducing costs and errors. For example, GMP assessment times were reduced from 120-180 minutes to under 5 minutes (a 95% efficiency gain).

4.2 Testimonials

“From an end user perspective, being involved in the development of AI required users to really focus on what tools would be beneficial to the assessment of clinical trial applications and how these could be applied”.

“We were able to collaborate with colleagues, across various disciplines, to identify processes/tools that would be helpful, and we were also heavily involved in the visual layout of the applications and performed extensive user end testing”.

“This allowed us to gain first-hand experience and provide feedback on the functions that worked well and others that still required development, which is critical to ensure end user functionality.”

4.3 Lessons Learnt

This project built trust with end-users through a heavily user-centred approach, using AI in the most controlled and effective way to help them complete their tasks. A collaborative, multidisciplinary team approach enabled the transfer of AI and data science skills to MHRA staff, supplemented by written guidance and learning materials. The project also ignited a passion for innovation, and a desire to keep innovating, within the CT team and across MHRA engineering and architecture, whose teams were key enablers of the project’s success.

4.4 Implications of the Knowledge Hub and GMP Compliance Checker for Future Practice, Policy and Research

The Knowledge Hub enables assessors to query the back catalogue of historical GNAs more quickly and easily. This empowers assessors with actionable context, strengthening the quality and consistency of MHRA’s regulatory oversight of clinical trials. The Hub serves as an indexed library of GNAs, continuously refreshed with new decisions. In doing so, it improves the consistency and reliability of new GNAs written by assessors and may also support the development of case studies. This supports the standardisation of GNAs, helping assessors follow a uniform review structure across all applications. It also provides structured guidance for issuing common GNAs, enhancing both consistency and replicability. Furthermore, regular updates offer ongoing training for both new and experienced assessors, allowing them to incorporate the latest information more seamlessly into their assessments.

The GMP Compliance Checker represents a fundamental shift in the time required to validate GMP compliance. Compliance with GMP in clinical trials is crucial for ensuring the safety, quality, and integrity of the IMPs used in human research. It minimises the risks of contamination, batch-to-batch variability, and degradation or instability of active ingredients. This matters for regulatory activities because non-compliance may lead to a clinical trial being put on hold or suspended and, ultimately, to rejection of trial data or to legal or financial penalties.

Against this backdrop, the GMP Compliance Checker delivers significant value. By streamlining and structuring the validation procedure, it reinforces consistency in assessment while freeing up valuable expert time. Seen through this lens, we believe it could support the assessment of multi-centre or multinational trials, where consistent GMP compliance is particularly important because it helps harmonise quality expectations across countries, facilitates the import and export of IMPs, and increases stakeholder trust. If these results are borne out in wider use, the tool might also have an impact on the commercialisation of drugs, since GMP-compliant manufacturing simplifies scaling to commercial production, reducing the need for re-validation and supporting faster global regulatory approval.

Our approach accelerated productisation by prioritising the pathways that delivered the greatest value for the MHRA most quickly. Users were engaged throughout, ensuring their needs were met at each stage of development and inspiring the CT team with a vision of what future AI solutions could achieve. The success of this project has built trust in new technology and established a roadmap for delivering further value through a collaborative, user-centred approach.

The outcomes of this project also have wider applicability. By enabling experts to access relevant information quickly and easily, the solutions developed here demonstrate how AI can support informed, timely decision-making across domains, which may help other regulators seeking to introduce AI into their processes. In particular, workflows that require greater consistency, and knowledge-intensive domains in which experts spend substantial time reviewing complex, unstructured documentation, stand to benefit.

5. Conclusion

This project took the teams on a challenging yet rewarding journey. The MHRA Clinical Trials Unit began by identifying a crucial problem: assessors needed support in the assessment process. We then searched for off-the-shelf products, only to find that none were available. The collaboration between the DTG and the engineering team allowed us to bring in additional groups, including assessors and external AI experts. This partnership transformed the working dynamic, fostering a shared purpose and enabling us to create customised tools that were not available on the market. The development of these two tools marks the beginning of a people-centred technological transformation, in which technology is designed to support our staff and enhance our efficiency. We are establishing a new working environment with a strong focus on patient safety, driven by the passion of our teams and supported by AI technology. This approach is helping to create a regulatory framework that will facilitate the safe development and testing of new medicines with the potential to save lives.

This work has been conducted in full compliance with MHRA policy and governance, including assurance by the MHRA CIT team and the MHRA DTG, and has moved into live operation. The approach successfully identified the products that would add the most value, and did so in a manner that built buy-in from expert end-users throughout. It also provided a forward view of further opportunities to add value, which the MHRA will pursue, including topic-model guidance provided directly to sponsors.

Editorial work

Ana Demonacos

Acknowledgements

Informed Solutions’ Team

Data Science

Matthew Hawthorn

Michael Evans

Tegan O’Connor

Liam Horrobin

User Centred Design

Hope Bristow

Molly Northcote

Joely Roberts

Eleanor Cook

Meenal Prajapati

Delivery Management

Adam Horrell

Software Engineering

Ryland Karlovich

Jack Gallop

Harry Ryan

Adam Caldwell

Divya Singhal

MHRA teams

CIT (assessors)

Clinical: Ibrahim Ivan

Non-clinical: Rayomand Khambata

Pharmaceutical: Kulvinder Bahra, Jasminder Chana

DTG

Claire Harrison

Phil Gillibrand

Amy De-Balsi

Software Engineering

Sumitra Varma

Edar Chan

Conflict of Interest

There are no perceived or actual Conflicts of Interest relating to this work.

Ethics approval

Ethics approval was not required.

References

1. How long does a new drug take to go through clinical trials? [Internet]. Cancer Research UK. CRUK; 2014 [cited 2025 Jul 22]. Available from: https://www.cancerresearchuk.org/about-cancer/find-a-clinical-trial/how-clinical-trials-are-planned-and-organised/how-long-does-a-new-drug-take-to-go-through-clinical-trials

2. Darzi A. Summary letter from Lord Darzi to the Secretary of State for Health and Social Care [Internet]. GOV.UK. 2024. Available from: https://www.gov.uk/government/publications/independent-investigation-of-the-nhs-in-england/summary-letter-from-lord-darzi-to-the-secretary-of-state-for-health-and-social-care

3. Marr B. A short history of ChatGPT: How we got to where we are today [Internet]. Forbes. 2023. Available from: https://www.forbes.com/sites/bernardmarr/2023/05/19/a-short-history-of-chatgpt-how-we-got-to-where-we-are-today/

4. Hutson M. How AI Is Being Used to Accelerate Clinical Trials. Nature. 2024 Mar 13;627(8003):S2–5.

5. Askin S, Burkhalter D, Calado G, El Dakrouni S. Artificial intelligence applied to clinical trials: opportunities and challenges. Health and Technology [Internet]. 2023 Feb 28;13(2). Available from: https://link.springer.com/article/10.1007/s12553-023-00738-2

6. Subbiah V. The next generation of evidence-based medicine. Nature Medicine [Internet]. 2023 Jan 16;29(1):49–58. Available from: https://www.nature.com/articles/s41591-022-02160-z

7. Medicines and Healthcare products Regulatory Agency. MHRA data strategy 2024–2027 [Internet]. GOV.UK. [cited 2025 Jul 23]. Available from: https://www.gov.uk/government/publications/mhra-data-strategy-2024-2027/mhra-data-strategy-2024-2027

8. Reimers N, Gurevych I. Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks [Internet]. arXiv. 2019. Available from: https://arxiv.org/abs/1908.10084

9. Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez AN, et al. Attention Is All You Need [Internet]. arXiv. 2017. Available from: https://arxiv.org/abs/1706.03762

10. Cakaloglu T, Szegedy C, Xu X. Text Embeddings for Retrieval from a Large Knowledge Base. Lecture Notes in Business Information Processing. 2020 Jan 1;338–51.

11. Huang Y, Lv T, Cui L, Lu Y, Wei F. LayoutLMv3: Pre-training for Document AI with Unified Text and Image Masking [Internet]. arXiv. 2022 Apr 18.

12. Tang X, Li X, Ding Y, Song M, Bu Y. The pace of artificial intelligence innovations: Speed, talent, and trial-and-error. Journal of Informetrics [Internet]. 2020 Nov 1;14(4):101094. Available from: https://www.sciencedirect.com/science/article/abs/pii/S1751157720301991

Because of the rapid pace of AI research, many of the most relevant advances were available only on arXiv, a moderated but non-peer-reviewed repository of research. While this limited our ability to cite recent peer-reviewed studies, the project prioritised practical implementation and the delivery of a production-ready solution over academic publication.